In EEG research, the latency of a component (hidden in the ERP) is conventionally measured as the time at which the ERP is most extreme. However, this method is not well warranted, as should be clear to anyone who has read Steven Luck's excellent chapters on ERP analysis in “An Introduction to the Event-Related Potential Technique”. Instead, he proposes a 50% area latency method: the latency is the point in time that splits the area under the curve into two equal halves. It yields the same latencies as peak measures on symmetrical ERPs and more accurate latencies on non-symmetrical ERPs, where peak measures fail.

A first solution

If you have your ERP as an array in MATLAB, you can compute the 50% area in this way:

erp = [1,2,3,4,5,6,7,8,9,7,4,2,-2,3]; % ERP data, one point for each sample.

[~,latency_index] = min(abs(cumsum(erp)-sum(erp)/2)); % Index in array that best divides it into 50-percent area

To use it with EEGLAB (assuming you have your epoched EEG structure) you might implement it in this way:

% Get the ERP waveform as erp. Set channel to the channel number first, e.g. 5 for Fz.

[~,~,~,~,~,erp] = pop_erpimage(EEG,1, channel, [], [], 1, 1, {}, [], '', 'erp','on','noshow','on');

% Only use the part of the ERP that represents the component you're analyzing, e.g.

samples = 100:200;

erp = erp(samples);

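% Get the index within the selected window, as above.

[~,latency_index] = min(abs(cumsum(erp)-sum(erp)/2));

% Convert the index to milliseconds from stimulus onset. Sample k of the epoch lies (k-1)/EEG.srate seconds after EEG.xmin, and the window index maps to epoch sample samples(1)+latency_index-1.

latency_ms = (EEG.xmin + (samples(1)+latency_index-2)/EEG.srate)*1000;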
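
As a sanity check on the index-to-time conversion, the time axis of an epoch can be built explicitly and indexed directly. The sketch below uses made-up values: srate, xmin and pnts are stand-ins for EEG.srate, EEG.xmin and EEG.pnts, and latency_index is an arbitrary number rather than a real result.

```matlab
% Hypothetical epoch parameters standing in for EEG.srate, EEG.xmin and EEG.pnts.
srate = 250;      % sampling rate in Hz
xmin  = -0.2;     % epoch start in seconds relative to stimulus onset
pnts  = 300;      % number of samples per epoch

% Time of every sample in the epoch, in milliseconds.
% The first sample sits at xmin, the k-th sample at xmin + (k-1)/srate.
times_ms = (xmin + (0:pnts-1) / srate) * 1000;

samples = 100:200;     % analysis window within the epoch
latency_index = 10;    % made-up result of the 50% area search inside the window

% Element k of the window is sample samples(k) of the epoch.
latency_ms = times_ms(samples(latency_index));
disp(latency_ms)
```

With a real EEGLAB dataset, EEG.times should hold this time axis in milliseconds for epoched data, so EEG.times(samples(latency_index)) would be an alternative, assuming that field is populated in your dataset.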

How it works

Let’s have a look at how this works. Remember that we want to find the sample where the area is equal on both sides. It consists of the following steps:

- Transform the erp array into an array of cumulative sums, i.e. every element is the sum of itself and all previous elements. E.g. the array erp = [1,3,4,0] becomes erp_cumsum = [1,4,8,8] (try to picture this ERP to ease your understanding). Each element then corresponds to the area up to and including that sample. The last element corresponds to the total area of the ERP.

- Now the job is simply to find the midmost value in erp_cumsum, i.e. the value that separates the area into two equal parts. To do this, we subtract half the total area from every element in erp_cumsum. Continuing the example from above, sum(erp)/2 = 4. The subtraction gives erp_reduced = erp_cumsum-sum(erp)/2 = [-3,0,4,4].

- The midmost value in erp_cumsum is now the element closest to zero (we can already see that this is sample number 2). Finding it in MATLAB is done by taking the absolute value, which gives erp_abs = abs(erp_reduced) = [3,0,4,4], and finding the index of the minimum value. This is simply [~,index] = min(erp_abs).
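
The steps above can be run one at a time on the toy array from the list. This is only a sketch of the same one-liner unrolled into named intermediate variables; half_area is a name introduced here for illustration.

```matlab
erp = [1, 3, 4, 0];                     % toy ERP from the walkthrough

erp_cumsum  = cumsum(erp);              % running area: [1 4 8 8]
half_area   = sum(erp) / 2;             % half of the total area: 4
erp_reduced = erp_cumsum - half_area;   % distance from the 50% point: [-3 0 4 4]
erp_abs     = abs(erp_reduced);         % unsigned distance: [3 0 4 4]

% Index of the sample whose running area is closest to half the total.
[~, index] = min(erp_abs);
disp(index)                             % 2
```

Note that min returns the first index when several elements tie for the minimum, so on flat stretches the earliest sample wins.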

Correction: defining the direction of the component

This method is not fool-proof, but things can be done to prevent some errors. Consider a positive ERP (e.g. P300) that starts and ends at negative values, e.g. erp = [-3,0,5,2,-1,-4]. This is a very nice peak and visually you would consider it all to be part of the P300. However, using cumulative sums, the start and end would count as “negative area”, which doesn’t make sense when we’re trying to find a latency.

The solution starts off by defining whether you’re looking for the latency of a positive or a negative peak. Then you adjust your ERP to contain only non-negative or only non-positive values by raising or lowering the whole ERP. In this example, we’re looking for a positive component, so we raise the ERP by 4 (its minimum is -4). If we were looking for a negative component, we’d lower the ERP by 5 (its maximum is 5). So:

% For positive components:

erp_adjusted = erp-min(erp); % = [1,4,9,6,3,0]

% For negative components:

erp_adjusted = erp-max(erp); % = [-8,-5,0,-3,-6,-9]

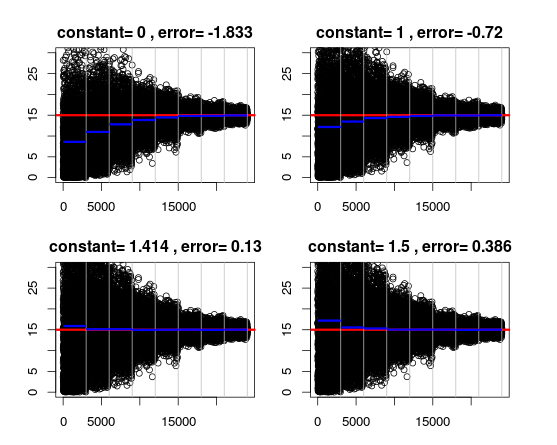
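
To see what the adjustment changes, the 50% area index can be computed on the example ERP both with and without it. This is a minimal sketch; the polarity variable is a hypothetical switch introduced here, not part of any toolbox.

```matlab
% Example ERP from the text: a positive peak that starts and ends below zero.
erp = [-3, 0, 5, 2, -1, -4];
polarity = 'positive';   % hypothetical switch; set to 'negative' for negative-going components

% Shift the waveform so that every sample has the same sign.
if strcmp(polarity, 'positive')
    erp_adjusted = erp - min(erp);   % [1 4 9 6 3 0]
else
    erp_adjusted = erp - max(erp);   % [-8 -5 0 -3 -6 -9]
end

% 50% area index on the raw and on the adjusted waveform.
[~, index_raw]      = min(abs(cumsum(erp)          - sum(erp)/2));
[~, index_adjusted] = min(abs(cumsum(erp_adjusted) - sum(erp_adjusted)/2));

disp([index_raw, index_adjusted])    % 6 3
```

On the raw waveform the negative and positive areas nearly cancel, so the search lands on the last sample; after the shift it lands on sample 3, the peak.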

Extending it to multiple ERPs

Say you’re dealing with multiple ERPs and want to calculate 50% latencies on all of them. You want to put in e.g. 50 ERPs and get 50 latencies as output. This is practical if you want to calculate latencies on individual trials to investigate variability, or on multiple subjects at the same time. This is where MATLAB’s excellent matrix operations get to work. I don’t want to go into details on this one. I’m just going to give you the code for positive-going components in single-trial ERPs:

samples = 100:200;

erp_matrix = pop_erpimage(EEG,1, channel, [], [], 1, 1, {}, [], '','noshow','on');

erp_adjusted = bsxfun(@minus,erp_matrix(samples,:),min(erp_matrix(samples,:)));

[~,latency_indices] = min(abs(bsxfun(@minus,cumsum(erp_adjusted),sum(erp_adjusted)/2)));

latencies = (EEG.xmin + (samples(1)+latency_indices-2)/EEG.srate)*1000; % epoch sample k lies (k-1)/EEG.srate seconds after EEG.xmin
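
The matrix version can be tried without EEGLAB on a small made-up matrix. The sketch below assumes the same layout as above (rows are samples, columns are trials) and passes the dimension argument explicitly to min, cumsum and sum; area_diff is a name introduced here for illustration.

```matlab
% Made-up data: 6 samples (rows) by 2 trials (columns).
erp_matrix = [-3  0;
               0  1;
               5  2;
               2  3;
              -1  6;
              -4 10];

% Raise each column so that its minimum is zero (positive-going components).
erp_adjusted = bsxfun(@minus, erp_matrix, min(erp_matrix, [], 1));

% Running area down each column, minus half of that column's total area.
area_diff = bsxfun(@minus, cumsum(erp_adjusted, 1), sum(erp_adjusted, 1) / 2);

% Row index of the sample closest to the 50% point, one per trial.
[~, latency_indices] = min(abs(area_diff), [], 1);

disp(latency_indices)   % 3 5
```

Spelling out the dimension keeps the code column-wise even if the matrix happens to contain a single sample row, where the default behaviour of min, cumsum and sum would switch to working along the row.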